Ph.Deez nutz.

I have friends who actually have a Ph.D. It takes many years to get one, along with a real attempt to advance a field. People tend to trust your opinion on a subject when you have a doctorate in that field.

I can’t even trust ChatGPT to answer a basic question without fucking up and apologizing to me, only to fuck up again.

Maybe stop treating language models like AGI? They’re awesome at recognizing semantic similarities between words and phrases (embeddings) as well as generating arbitrary but reasonable looking output that matches an expected output (structured outputs). That’s cool enough. Stop pretending like it isn’t and falsely advertising it as being able to cure cancer and world hunger, especially when you wouldn’t even be happy if it did.

AI as it sits is a tool that has specific use cases. It is absolutely not intelligence, as it’s commonly marketed. It may seem intelligent to the uninformed, but boy howdy is that a mistake.

It’s a sad reflection of our current state when being able to string together coherent sentences is impressive enough for many people to confuse it with truth and/or intelligence.

It wasn’t that long ago that it was unfathomable for anything other than humans to be able to do this.

“Polly want a cracker” has been around since before anyone alive today was born, and that’s the same thing as what LLMs are doing in essence (mimicking human speech), but no one was taking advice from parrots.

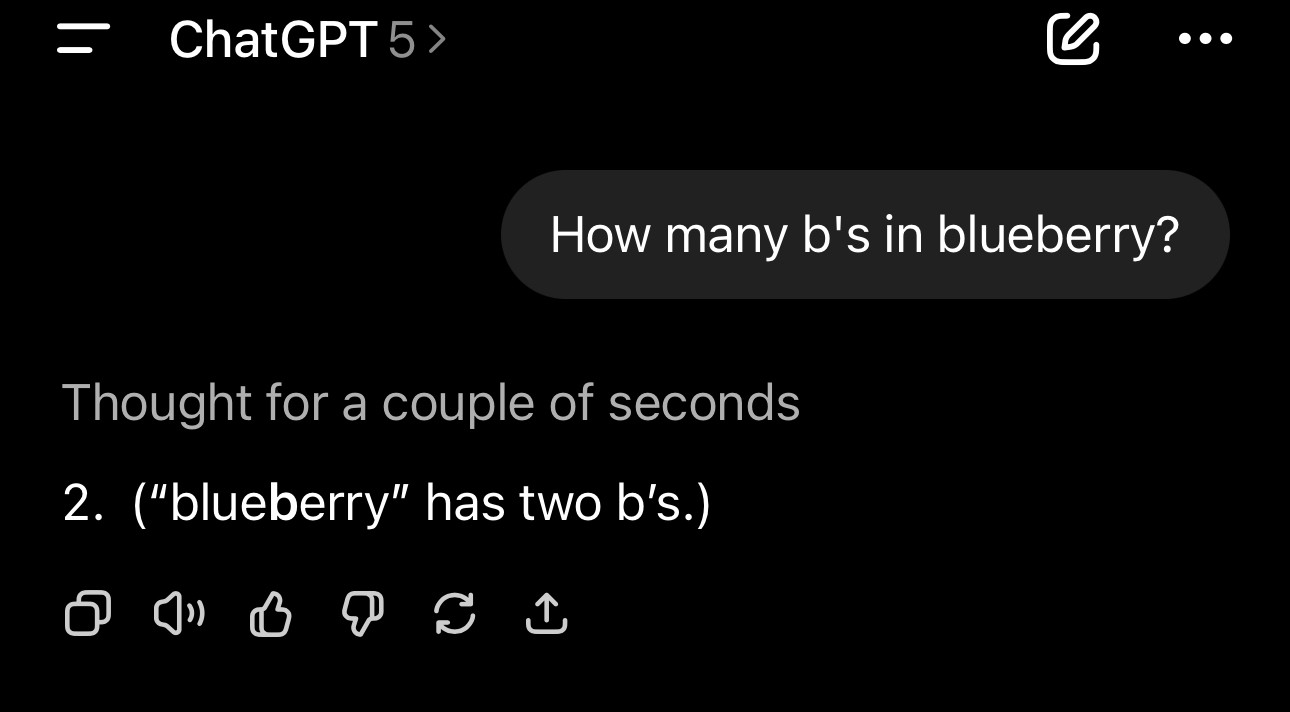

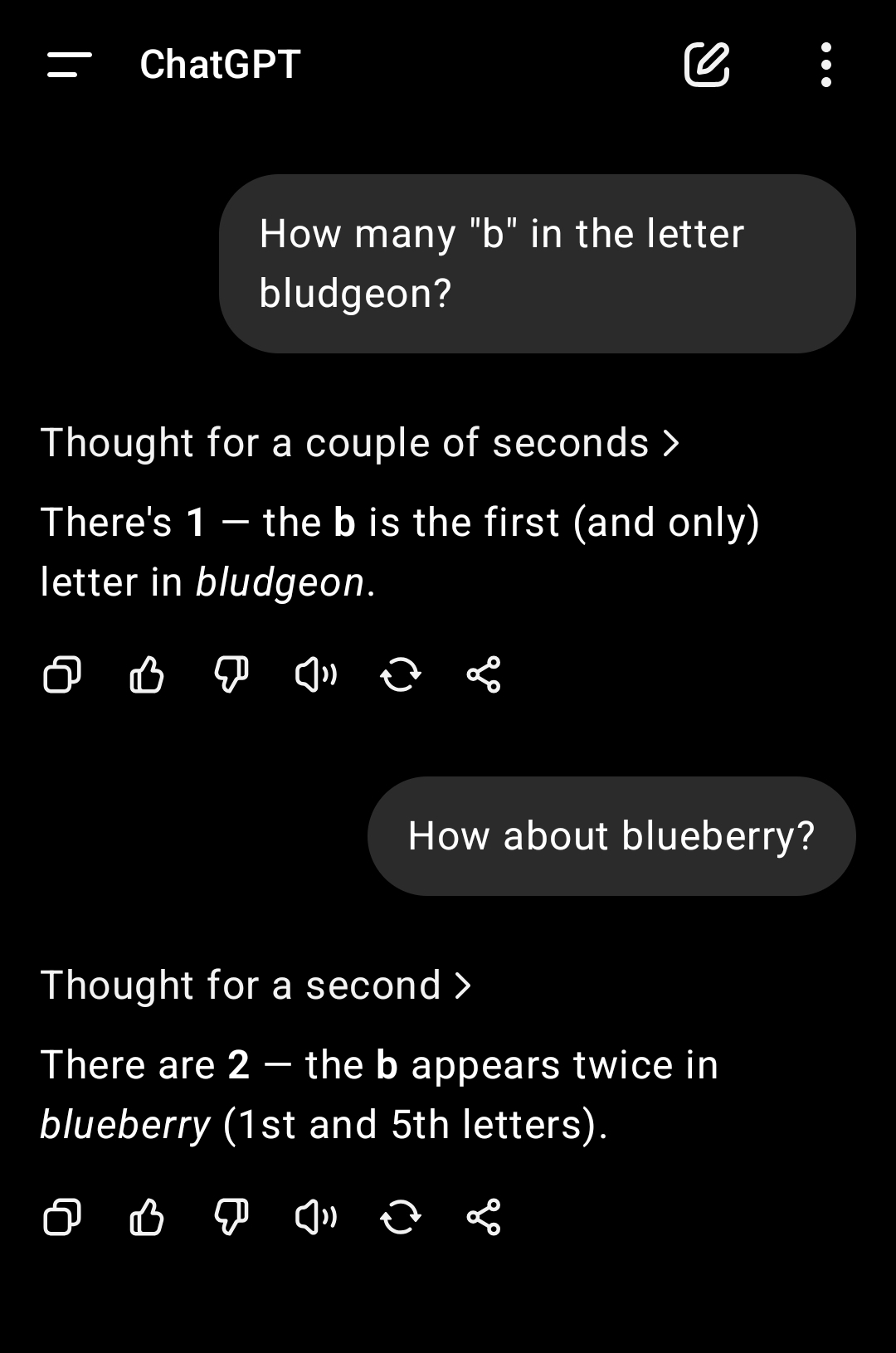

If I asked a PhD, “How many Bs are there in the word ‘blueberry’?”, they’d call an ambulance for my obvious, severe concussion. They wouldn’t answer, “There are three Bs in the word blueberry! I know, it’s super tricky!”

I don’t feel this is a good example of why LLMs shouldn’t be treated like PhDs.

My first interactions with GPT-5 have been pretty awful, and I’d test it, but it’s not available to me anymore.

Edit: I am not having a stroke, I’m bad at typing and autocorrect hates me

Do you smell toast?

BlackBerry toast

FWIW, ChatGPT 5 gets this correct

Fuckin’ does it?

It did for me 🤷🏻‍♂️

You appear to be using the older GPT model. The newer model calculates and answers correctly for most words, at least for the few I asked.

LLMs are fundamentally unsuitable for character counting on account of how they ‘see’ the world - as a sequence of tokens, which can split words in non-intuitive ways.
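To make the token point concrete, here’s a toy sketch. The vocabulary and the token IDs are invented purely for illustration (a real model uses a far larger learned vocabulary and may split “blueberry” differently), and `toy_tokenize`/`TOY_IDS` are hypothetical names. The point is only that the model receives piece IDs, not individual letters.

```python
# A toy greedy longest-match subword tokenizer. NOT any real model's tokenizer;
# the vocabulary below is made up for illustration.
TOY_VOCAB = {"blue", "berry", "straw", "b", "l", "u", "e", "r", "y"}

# Hypothetical integer IDs: this is what a model would actually "see".
TOY_IDS = {piece: idx for idx, piece in enumerate(sorted(TOY_VOCAB))}


def toy_tokenize(word: str, vocab: set = TOY_VOCAB) -> list:
    """Split `word` into the longest vocabulary pieces, left to right."""
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest candidate first, shrinking until one is in the vocab.
        for j in range(len(word), i, -1):
            piece = word[i:j]
            if piece in vocab:
                tokens.append(piece)
                i = j
                break
        else:
            # Nothing matched: fall back to a single raw character.
            tokens.append(word[i])
            i += 1
    return tokens


pieces = toy_tokenize("blueberry")
print(pieces)                        # ['blue', 'berry']
print([TOY_IDS[p] for p in pieces])  # [2, 1]
```

Two opaque numbers go in; nothing in `[2, 1]` says “there are two b’s here.”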

Regular programs already excel at counting characters in words, and LLMs can be used to generate such programs with ease.
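For comparison, the “regular program” version is a one-liner. A minimal Python sketch (the function names are just illustrative):

```python
from collections import Counter


def count_letter(word: str, letter: str) -> int:
    """Return how many times `letter` appears in `word`, ignoring case."""
    return word.lower().count(letter.lower())


def letter_histogram(word: str) -> Counter:
    """Return a Counter mapping each character of `word` to its frequency."""
    return Counter(word.lower())


print(count_letter("blueberry", "b"))      # 2
print(letter_histogram("blueberry")["r"])  # 2
```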

But they don’t recognize their inadequacies, instead spouting confident misinformation.

This is true. They do not think, because they are next token predictors, not brains.

Having this in mind, you can still harness a few usable properties from them. Nothing like the kind of hype the techbros and VCs imagine, but a few moderately beneficial use-cases exist.

Without a doubt. But PhD-level thinking requires a kind of introspection that LLMs (currently) just don’t have. And the letter counting thing is a funny example of that inaccuracy.

The tokenization is a low-level implementation detail; it shouldn’t affect an LLM’s ability to do basic reasoning. We don’t do arithmetic by counting how many neurons we can feel firing in our brain, we have higher level concepts of numbers, and LLMs are supposed to have something similar. Plus, in the “““thinking””” models, you’ll see them break up words into individual letters or even write them out in a numbered list, which should break the tokens up into individual letters as well.
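Here’s roughly what that spell-it-out procedure looks like when ordinary code does it. This is just a sketch of the idea with made-up helper names, not a claim about what happens inside any model:

```python
def spell_as_numbered_list(word: str) -> list:
    """Write a word out one character per line: '1. b', '2. l', ..."""
    return [f"{i}. {ch}" for i, ch in enumerate(word, start=1)]


def count_from_list(lines: list, letter: str) -> int:
    """Count list entries whose character (after the 'N. ' prefix) is `letter`."""
    return sum(
        1 for line in lines
        if line.split(". ", 1)[1].lower() == letter.lower()
    )


lines = spell_as_numbered_list("blueberry")
print("\n".join(lines))
print(count_from_list(lines, "b"))  # 2
```

Once every letter sits on its own line, the count is trivial, which is why getting it wrong after doing this is so damning.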

ChatGPT in its PhD thesis defense: “Oh, I’m sorry for the misinformation, let me try this again…”

LOL!! 🤣 Yes! This exactly!

Just conmen selling their snake oil.

Have to agree with this. My experience with the various AI models is that they’re fairly terrible. I really don’t want to see this garbage driving cars where lives are at stake.

I could power a data center with the rolling of my eyes after reading this headline.

🎓 PhB level checks out.

🚫 Blockchain level uselessness and waste as well.🎈📌💥

Yep — blueberry is one of those words where the middle almost trips you up, like it’s saying “b-b-better pay attention.”

… I hate this technology so fucking much…

Also, it trying to gaslight you into believing bluebberry is real was very funny.

Well, it answers correctly in my case.

“It can now drive its users straight into an active psychosis 35% faster by sounding more persuasive than ever before!”

OpenAI claims a lot of things.

I mean, that doesn’t really mean much, given that you don’t have to be very intelligent to get one. It’s mostly an endurance exercise and often a test of how much frustration and uncertainty you can take in your life.

This guy always shows up with his hands like this in news photos.

I know it’s irrelevant but I had to point it out

How many ChatGPhDs will it take to do the math on how long it is until this bubble pops?

Oops, I ate the onion.

Right? No way that’s considered a legitimate argument, since a PhD just says you dedicated yourself to a very specific topic, and you aren’t necessarily smarter or better spoken for it.

Or is he just bragging that he found a way to filter it down to just people’s PhD theses that they stole? Maybe a PhD in civil engineering lol.

Shouldn’t be hard to improve over this rubbish:

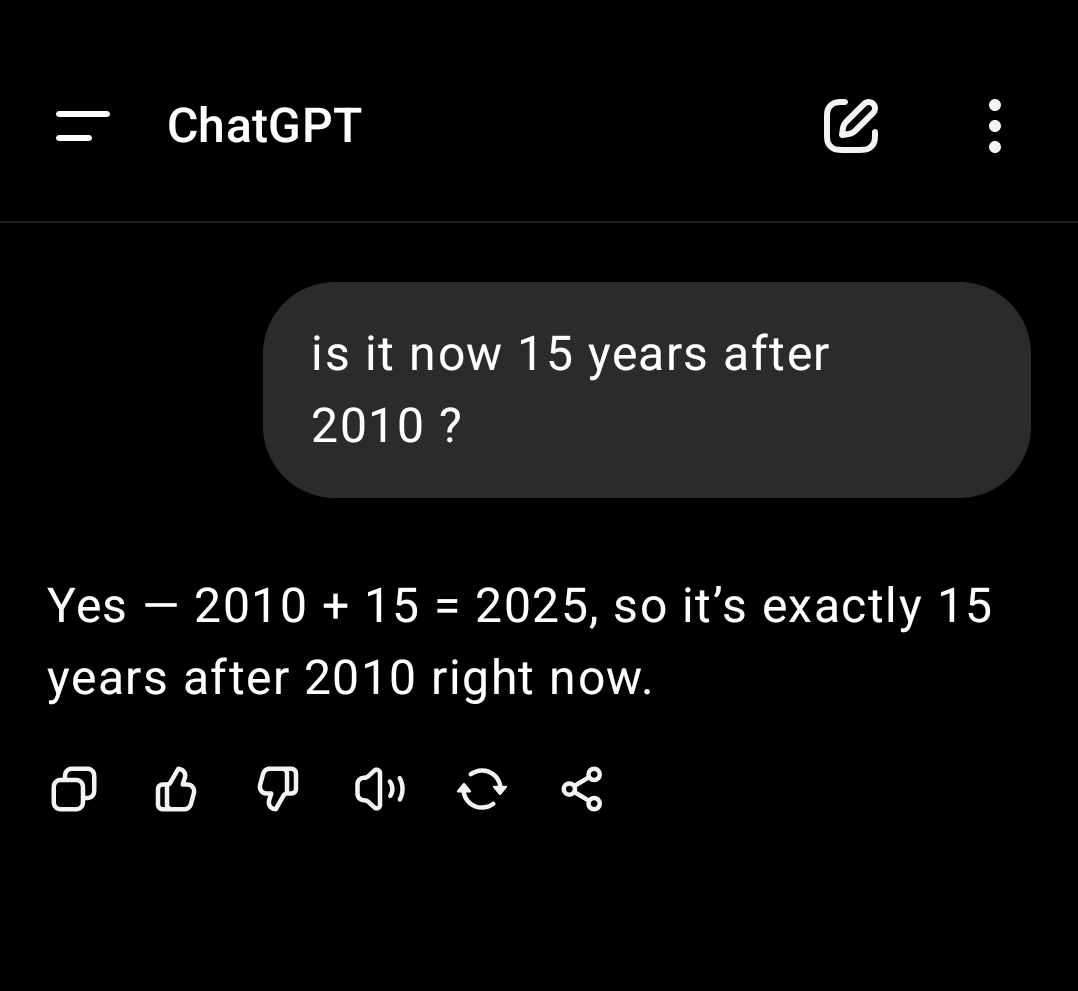

is it now 15 years after 2010?

GPT-4: No, it is not 15 years after 2010. As of today, August 8, 2025, it is 15 years after 2010.

The gpt-5 model answers this correctly.
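For the record, the arithmetic GPT-4 tripped over is a single subtraction. A trivial Python check, taking the date stated in that GPT-4 reply as given:

```python
from datetime import date

# The "today" date quoted in the GPT-4 reply.
today = date(2025, 8, 8)

years_since_2010 = today.year - 2010
print(years_since_2010)        # 15
print(years_since_2010 == 15)  # True
```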