
2 years ago

I expect so, but as we start to get more agents capable of doing jobs without hand-holding, there will be some jobs where time matters less than ability. You could potentially run a very powerful model on a GPU with 24GB of memory.
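For a rough feel of what "fits on 24GB" means, here is a back-of-envelope sketch. It only counts the memory needed for the weights at a given precision, plus a flat allowance for everything else. The function names and the 20% overhead are my own assumptions for illustration, not figures from the thread, and real usage depends on context length, runtime, and how the model is loaded.

```python
def weight_memory_gb(n_params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    # billions of parameters * bits each / 8 bits per byte = billions of bytes
    return n_params_billion * bits_per_weight / 8


def fits_in_vram(
    n_params_billion: float,
    bits_per_weight: float,
    vram_gb: float = 24.0,
    overhead_fraction: float = 0.2,  # assumed allowance for activations, cache, etc.
) -> bool:
    """Rough check: do the weights plus an assumed overhead fit in VRAM?"""
    needed = weight_memory_gb(n_params_billion, bits_per_weight) * (1 + overhead_fraction)
    return needed <= vram_gb


if __name__ == "__main__":
    for params, bits in [(13, 16), (13, 8), (70, 4), (70, 2)]:
        size = weight_memory_gb(params, bits)
        verdict = "fits" if fits_in_vram(params, bits) else "does not fit"
        print(f"{params}B at {bits}-bit: ~{size:.1f} GB of weights, {verdict} in 24 GB")
```

This says nothing about speed or output quality at low precision. It just shows why lower precision, or not keeping every weight on the GPU at once, is what makes larger models plausible on a 24GB card.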

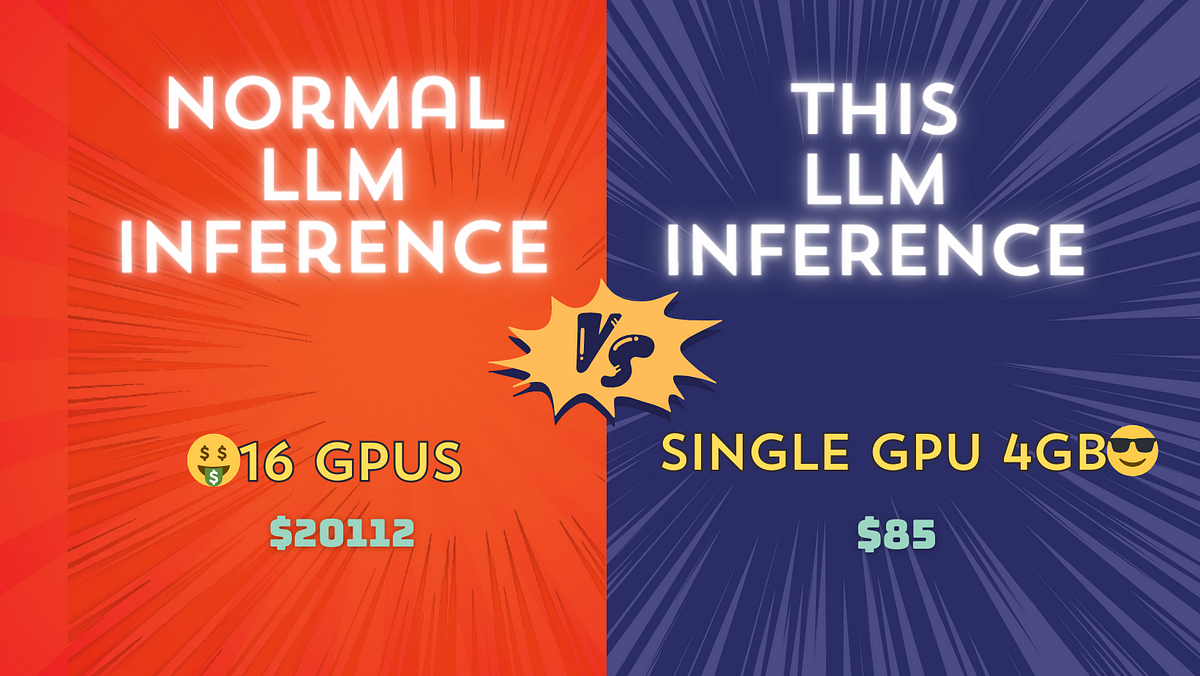

Yeah, I’m not sure how they get that. But if you want to run a model in-house, as many people would prefer, you could run much more capable models on consumer-grade hardware and save money compared with buying the more expensive kit. Many people already have decent hardware, and this extends what they can run before they need to fork out for new hardware.

I know, I’m guessing.