Just be aware that W11 is secure boot only.

There is a lot of ambiguous nonsense about this subject from people who lack a fundamental understanding of Secure Boot. Secure Boot is not handled by the Linux kernel at all. It is part of the systems distros build outside of the kernel, and these differ between distros. Fedora does it best IMO, but Ubuntu has an advanced system too. Gentoo has tutorial information about how to set up the system properly yourself.

The US government also has a handy PDF about setting up Secure Boot properly. This subject is somewhat complicated by the fact that the UEFI bootloader graphical interface standard is only a reference implementation, with no guarantee that it is fully implemented (especially on consumer-grade hardware). Last I checked, Gentoo has the only tutorial about using an application called Keytool to boot directly into the UEFI system, bypassing the GUI implemented on your hardware, where you are able to set your own keys manually.

If you choose to try this, some guides will suggest using a stronger encryption key than the default. The worst that can happen is that the new keys get rejected and the defaults are restored. That can make it seem like your system does not support custom keys. Be sure to try again with the default key type for UEFI in your bootloader GUI implementation. If it still does not work, you must use Keytool.
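Before touching any keys, it helps to see what state the firmware thinks it is in. Here is a minimal Python sketch, assuming a Linux system booted in UEFI mode with efivarfs mounted at the usual `/sys/firmware/efi/efivars` path. The `SecureBoot` and `SetupMode` variables and the global GUID come from the UEFI spec; the function names `read_flag` and `describe` are just ones I made up for illustration.

```python
from pathlib import Path

# GUID of the EFI global variable namespace (defined by the UEFI spec).
EFI_GLOBAL = "8be4df61-93ca-11d2-aa0d-00e098032bdc"
EFIVARS = Path("/sys/firmware/efi/efivars")  # assumes efivarfs is mounted here


def read_flag(name, base=EFIVARS):
    """Return a one-byte UEFI variable as an int, or None if it cannot be read."""
    path = Path(base) / f"{name}-{EFI_GLOBAL}"
    try:
        raw = path.read_bytes()
    except OSError:
        return None
    # efivarfs prepends a 4-byte attribute field; the value follows it.
    return raw[4] if len(raw) >= 5 else None


def describe(base=EFIVARS):
    """Summarize the Secure Boot state in one human-readable line."""
    secure_boot = read_flag("SecureBoot", base)
    setup_mode = read_flag("SetupMode", base)
    if secure_boot is None:
        return "no Secure Boot variable found (legacy boot, or efivarfs not mounted)"
    state = "enabled" if secure_boot == 1 else "disabled"
    if setup_mode == 1:
        return f"Secure Boot {state}; firmware is in Setup Mode (custom keys can be enrolled)"
    return f"Secure Boot {state}; firmware is in User Mode (a platform key is enrolled)"


if __name__ == "__main__":
    print(describe())
```

If this reports Setup Mode, the firmware should accept a new set of keys. If it reports User Mode, the existing keys normally have to be cleared from the firmware interface first.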

The TPM is a small physical hardware chip. Inside there is a register holding a secret hardware encryption key that is hard-coded. This secret key is never accessible to software. Instead, it is used to encrypt new keys, to hash against those keys to verify that a software package has not been tampered with, and to decrypt information outside of the rest of the system, that is, outside the DRAM/system memory that other components reach through Direct Memory Access (DMA). This effectively means a piece of software is able to create secure connections to the outside world using encrypted communications that cannot be read by anything else running on your system.
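The "hash to verify nothing was tampered with" part can be sketched in a few lines. This is a simplified Python model of the extend operation a TPM 2.0 applies to its measurement registers (PCRs), not a real TPM API. The stage names are hypothetical stand-ins, and I am assuming SHA-256 and a register that starts as all zero bytes.

```python
import hashlib


def extend(pcr: bytes, component: bytes) -> bytes:
    """Fold one measured component into a register: new = SHA-256(old || SHA-256(component))."""
    return hashlib.sha256(pcr + hashlib.sha256(component).digest()).digest()


def measure_chain(components):
    """Measure each boot stage in order and return the final register value."""
    pcr = bytes(32)  # assumption: the register starts out as 32 zero bytes
    for blob in components:
        pcr = extend(pcr, blob)
    return pcr


# Hypothetical stand-ins for real boot stages.
good = measure_chain([b"firmware", b"bootloader", b"kernel"])
tampered = measure_chain([b"firmware", b"bootloader", b"kernel+rootkit"])

print(good.hex())
print(good == tampered)  # any change to any stage alters the final value
```

Because each new value depends on the previous one, a register can only be extended, never set directly. That is why a secret released only for a specific register value stays locked if any stage of the boot chain changed.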

As a more tangible example, Google Pixel phones are the only ones with a TPM chip. This TPM chip is how and why GrapheneOS exists. They leverage the TPM chip to encrypt the device and to verify the operating system, and they create the secure encrypted communication path used to manage Over The Air software updates automatically.

There are multiple keys in the UEFI bootloader on your computer. The main key belongs to the hardware manufacturer. Anyone with this key is able to change all software from UEFI down on your device. These keys occasionally get leaked or compromised too, and often the issue is never resolved. It is up to you to monitor and update them, as insane as that sounds.

The next key level down is the package key for an operating system. It cannot alter UEFI software, but it does control anything that boots after. This is typically where the Microsoft key is the default, which means they effectively control what operating system boots. Microsoft has issued what are called shim keys to Ubuntu and Fedora. Last I heard, these keys expired in October 2025 and either had to be refreshed or may not have been reissued by M$. This shim was like a pass for these two distros to work under the M$ PKey. In other words, vanilla Ubuntu and Fedora Workstation could just work with Secure Boot enabled.
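To see which of these key stores actually exist on your machine, something like the following Python sketch works on Linux. I am assuming the "main key" above maps to the UEFI Platform Key (`PK`) and that the OS-level keys live in `KEK` and `db`, with `dbx` as the revocation list. The variable names and GUIDs are the ones defined by the UEFI spec; `key_report` is a hypothetical helper name, and the path assumes efivarfs is mounted in the usual place.

```python
from pathlib import Path

EFIVARS = Path("/sys/firmware/efi/efivars")  # assumes efivarfs is mounted here

# Variable name -> (namespace GUID, role). GUIDs are the ones defined by the UEFI spec.
GLOBAL = "8be4df61-93ca-11d2-aa0d-00e098032bdc"
IMAGE_SECURITY = "d719b2cb-3d3a-4596-a3bc-dad00e67656f"
KEY_VARS = {
    "PK": (GLOBAL, "platform key (the top-level owner key)"),
    "KEK": (GLOBAL, "key exchange keys (may update db/dbx)"),
    "db": (IMAGE_SECURITY, "allowed signers and hashes"),
    "dbx": (IMAGE_SECURITY, "revoked signers and hashes"),
}


def key_report(base=EFIVARS):
    """Return {name: payload size in bytes, or None if the variable is absent/unreadable}."""
    report = {}
    for name, (guid, _role) in KEY_VARS.items():
        path = Path(base) / f"{name}-{guid}"
        try:
            raw = path.read_bytes()
        except OSError:
            report[name] = None
            continue
        report[name] = max(len(raw) - 4, 0)  # skip the 4-byte attribute prefix
    return report


if __name__ == "__main__":
    for name, size in key_report().items():
        role = KEY_VARS[name][1]
        status = "not present" if size is None else f"{size} bytes"
        print(f"{name:4} {status:>12}  {role}")
```

This only reports whether each store is populated and how large it is. Decoding the certificates inside takes a dedicated tool, but an empty or missing `PK` is already a strong hint that nobody has taken ownership of the machine yet.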

All issues in this space have nothing to do with where you put the operating systems on your drives. Stating nonsense about dual booting a partition is the stupid ambiguous misinformation that causes all of the problems. It is irrelevant where the operating systems are placed. Your specific bootloader implementation may be optimised to boot faster by jumping into the first one it finds. That is not the correct way for Secure Boot to work. It is supposed to check for any bootable code and delete anything without a signed encryption key. People who do not understand this system are playing a game of Russian Roulette. Their one drive may get registered first in UEFI 99% of the time due to physical hardware PCB design and layout. Then the one time some random power quality issue shows up, due to a power transient or whatnot, suddenly their OS boot entry is deleted.

The main key and package keys make their holders the encryption key owners of your hardware. People can literally use these to log into your machine if they have access to these keys, and they can install or remove software from this interface. You have the right to take ownership of your machine by setting these yourself. You can set the main key, then use the Microsoft system online to get a new package key to run W10 w/SB or W11. You can sign any distro or other bootable code with your main key.

Other than the issue of one of the default keys from the manufacturer or Microsoft getting compromised, I think the only third-party vulnerabilities that Secure Boot protects against are physical-access attacks. The system places a lot of trust in the manufacturer and Microsoft; they are the owners of the hardware, able to lock you out, surveil you, or theoretically exploit you with stalkerware. In practice, these connections still use DNS on your network. If you have not disabled or blocked ECH like cloudflare-ech.com, I believe it is possible for a server to make an ECH connection and then create a side-channel connection that would not show up on your network at all. Theoretically, I believe Microsoft could use their PKey on your hardware to connect to it through ECH after your machine connects to any of their infrastructure.

Then the TPM chip becomes insidious and has the potential to create a surveillance state, as it can be used to further encrypt communications. The underlying hardware in all modern computers has another secret operating system too, so it does not need to cross your machine. For Intel, this system is called the Management Engine. On AMD it is the Platform Security Processor. On ARM it is called TrustZone.

Anyways, all of that is why the Linux kernel does not directly support Secure Boot, along with the broader machinery and the abstracted broader implications of why it matters.

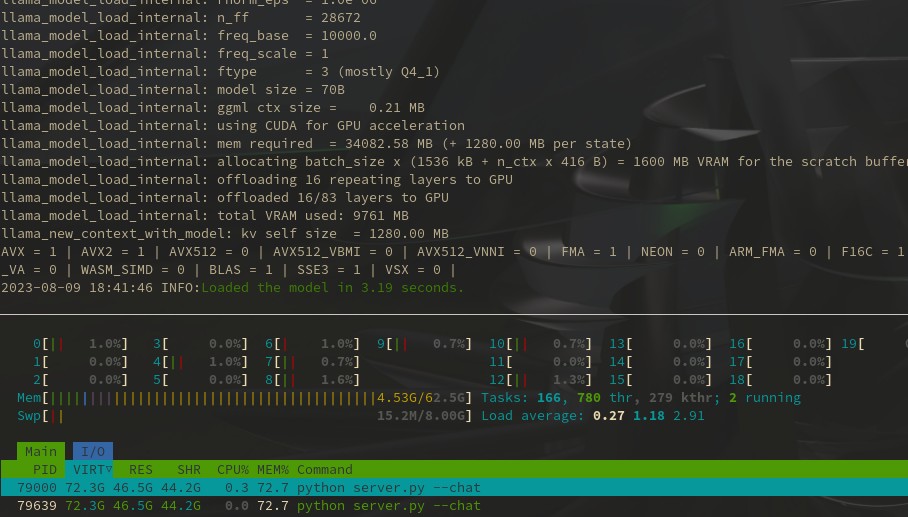

I have a dual boot W11 partition on the same drive with Secure Boot and have had this for the last 2 years without ever having an issue. It is practically required to do this if you want to run CUDA stuff. I recommend owning your own hardware whenever possible.

I have never used or cared about this W11. It has never seen the internet. I only keep it around for my keyboard’s RGB controller app in case I ever need it. So I have no clue if this is everything or whatnot, but that is a screenshot of my access to the Windows file system from within the file manager of Fedora. That is a dual boot partition. Fedora is particularly good at coexisting with a dual boot partition.