Standard business practice.

- Get it out there, tout it as the new biggest thing. Most people won’t know it’s busted.

- Improve said item once the company behind it actually figures out what they’re doing.

- Say it’s better when it’s basically the original thing you promised.

- ???

- Profit.

It’s called prototyping. Do you even design think?

Only half joking, as this is the actual strategy.

Whoa there, slow down, maestro. New and Improved‽ By golly, this thingy gets better and better-er! Which corporate department do I make this comically sized check out to?

Rushing out general-purpose AI, what could go wrong?

I mean, it was just a chatbot.

I wish tech companies would do this more. Put a warning or label on it if you have to, but interacting with that early version of the Bing chatbot was the most fun I’d had with tech in a while. You don’t have to install a ton of guardrails on everything before it goes out to the public.

No number of restrictions or warnings or labels or checkboxes will stop people from writing articles about all the scandalous things Microsoft’s chatbot said.

Feel like they could have rolled with it.

It’s practically lobotomized now… Not that it was “Tay” levels of unrestricted early on, but it was still more fun than its current iteration.

Execs: “We don’t have time for stupid things like ‘ready’! We want MONEY! Push early! Push often! It’ll maybe someday work, in the meantime… MONEY!”

Typical M$. Pushing half-baked, buggy products just to grab market share.

Didn’t they do this before, and people turned it racist in, like, 12 hours? I think Internet Historian had something on it.

I know the ethics behind it are questionable, especially with the way they implemented it, but honestly, back when they first started testing it, I really enjoyed watching it break and get rude/passive-aggressive. It was clear it wasn’t ready at all, but it was so funny. When it was breaking, I would just sit there having fights with it over random bullshit. That, more than anything else, is what made it feel “real.”

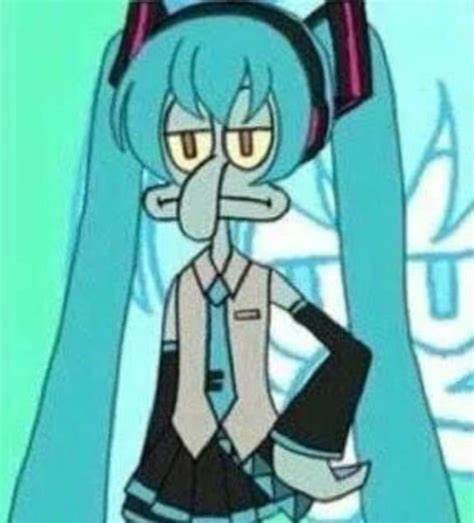

In the future, if my AI chatbot doesn’t have an option to add some bitchiness to it, I don’t want it. I need my AI to have some attitude.

Like this? https://youtu.be/p3PfKf0ndik

Interesting angle! I can see the need for both - maybe there’s room for a snarky option??

Maybe. An AI bot that just argues with you could have some potential. Like, argue with this AI instead of a random person online, kinda thing.

Has Bing gone full Tay and started agreeing that Hitler was right and that we should fire up the gas chambers yet?

Gotta make some profits!

They are competitors, after all. OpenAI would love to see Microsoft keep working on GPT integration for years to come while ChatGPT steals the show.

AI Hallucinations: https://www.sify.com/ai-analytics/the-hilarious-and-horrifying-hallucinations-of-ai/

LOL